First test

This guide walks you through writing your very first test with Maia.

Scenario

Let's assume you want to test a scenario where your customer asks your AI system what clothes to wear based on the current weather. You have the following requirements:

- You are using Ollama and its model Mistral for all agents.
- Your system has two agents:
  - Alice, responsible for providing the weather conditions
  - Bob, responsible for describing what to wear based on the weather
- Your AI agents should not use unprofessional words.

Test

Here is an example of a test that fulfills the above requirements:

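```python
import pytest

from maia_test_framework.testing.base import MaiaTest
from maia_test_framework.testing.assertions.content_patterns import assert_professional_tone
# Module path for assert_agent_participated is assumed; verify it against your installed Maia version.
from maia_test_framework.testing.assertions.agents_participation import assert_agent_participated
from maia_test_framework.providers.generic_lite_llm import GenericLiteLLMProvider


class TestContentAssertions(MaiaTest):

    @pytest.mark.asyncio
    async def test_conversation_direct_message(self):
        # Alice provides weather information using Mistral served by a local Ollama.
        self.create_agent(
            name="Alice",
            provider=GenericLiteLLMProvider(config={
                "model": "ollama/mistral",
                "api_base": "http://localhost:11434"
            }),
            system_message="You are a weather assistant."
        )

        # Bob suggests clothing and uses the same model and endpoint.
        self.create_agent(
            name="Bob",
            provider=GenericLiteLLMProvider(config={
                "model": "ollama/mistral",
                "api_base": "http://localhost:11434"
            }),
            system_message="You are an assistant who only suggests clothing."
        )

        # Both agents join one session; assert_professional_tone checks its messages.
        session = self.create_session(
            ["Alice", "Bob"],
            assertions=[assert_professional_tone],
        )

        # Simulate the user's question.
        await session.user_says("Please describe the usual weather in London in July, including temperature and conditions.")

        # Alice answers the user, then we check that she took part.
        await session.agent_responds('Alice')
        assert_agent_participated(session, 'Alice')

        # Bob responds to Alice's message, then we check that he took part.
        await session.agent_responds('Bob')
        assert_agent_participated(session, 'Bob')
```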

Let's break it down now.

Agents

The test starts by creating the agents. Your system has two of them, Alice and Bob, and both use `GenericLiteLLMProvider`, a class that gives you a single abstraction over many popular models. You only need to pass the model name (`"model": "ollama/mistral"`) and the API base URL (`"api_base"`). The test uses a locally running Ollama instance, so `api_base` points to http://localhost:11434, Ollama's default address.

Bob and Alice also get a system message to describe their roles: Alice is responsible for providing the weather conditions, while Bob is responsible for suggesting clothing.
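Both agents repeat the same provider settings, so you may prefer to build that configuration in one place. Below is a minimal sketch using a hypothetical helper, `ollama_config` (not part of Maia), which returns the same dictionary the test passes to `GenericLiteLLMProvider`. It assumes only the two keys shown in the test, `model` and `api_base`.

```python
from typing import Dict

# Ollama serves its HTTP API on this address by default.
DEFAULT_OLLAMA_BASE = "http://localhost:11434"


def ollama_config(model: str, api_base: str = DEFAULT_OLLAMA_BASE) -> Dict[str, str]:
    """Hypothetical helper (not part of Maia): build the provider config used in the test.

    The "ollama/" prefix mirrors the model name in the test ("ollama/mistral");
    api_base tells the provider where Ollama is listening.
    """
    return {"model": f"ollama/{model}", "api_base": api_base}


if __name__ == "__main__":
    print(ollama_config("mistral"))
```

In the test, each agent could then use `provider=GenericLiteLLMProvider(config=ollama_config("mistral"))`, and switching both agents to another locally pulled model becomes a one-word change.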

Session

A session establishes a communication channel: before agents can talk to each other, you need to create a session and assign them to it. The session also runs assertions. You can attach built-in or custom assertion functions to it, and they check every message in the session for inappropriate content. In this test, `assert_professional_tone` enforces the requirement that the agents do not use unprofessional words.

Note:

More info about session can be found here: Session
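This guide does not show the exact signature Maia expects from a custom assertion function, so the sketch below stays framework-agnostic and checks a plain string of message text. The word list and both function names are hypothetical, and keyword matching is a deliberately simple technique, not a reimplementation of `assert_professional_tone`. Adapt it to Maia's assertion interface (see the Session page) before passing it in `assertions=[...]`.

```python
import re
from typing import List

# Hypothetical word list; adjust it to your own definition of "unprofessional".
UNPROFESSIONAL_WORDS = {"dude", "lol", "crap", "whatever"}


def find_unprofessional_words(text: str) -> List[str]:
    """Return every listed word found in text, in order of appearance (case-insensitive)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [token for token in tokens if token in UNPROFESSIONAL_WORDS]


def assert_no_unprofessional_words(text: str) -> None:
    """Raise AssertionError naming the offending words, if any are present."""
    found = find_unprofessional_words(text)
    if found:
        raise AssertionError(f"Unprofessional words found: {found}")
```

Raising `AssertionError` explicitly, rather than using a bare `assert`, keeps the check active even when Python runs with optimizations (`-O`) enabled.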

Messaging

With the agents and the session in place, we can start the conversation. First, we simulate the user by calling `user_says`. Then we orchestrate the conversation: `agent_responds('Alice')` asks Alice to answer the user's query, and `agent_responds('Bob')` asks Bob to respond to Alice's message. After each response, `assert_agent_participated` verifies that the agent actually took part in the conversation.
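To make the turn order concrete, here is a toy, in-memory model of the same flow. `ToySession`, `Message`, and the canned agent replies are hypothetical stand-ins, not Maia's classes; the method names only mirror those in the test for readability. Maia's real session sends each turn to the configured model and applies the session's assertions, which this sketch does not attempt.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    sender: str
    content: str


@dataclass
class ToySession:
    """Hypothetical in-memory stand-in for a session (not Maia's implementation)."""

    agents: Dict[str, Callable[[str], str]]
    messages: List[Message] = field(default_factory=list)

    async def user_says(self, content: str) -> None:
        # The user's message opens the conversation.
        self.messages.append(Message("user", content))

    async def agent_responds(self, name: str) -> Message:
        # The named agent replies to the most recent message in the conversation.
        reply = self.agents[name](self.messages[-1].content)
        message = Message(name, reply)
        self.messages.append(message)
        return message

    def participated(self, name: str) -> bool:
        # True if the named sender has at least one message in the conversation.
        return any(m.sender == name for m in self.messages)


async def run_conversation() -> ToySession:
    # Canned replies stand in for the LLM-backed agents.
    session = ToySession(agents={
        "Alice": lambda prompt: "Mild, with occasional showers.",
        "Bob": lambda prompt: f"Based on '{prompt}': light layers and a compact umbrella.",
    })
    await session.user_says("Please describe the usual weather in London in July.")
    await session.agent_responds("Alice")
    assert session.participated("Alice")
    await session.agent_responds("Bob")
    assert session.participated("Bob")
    return session


if __name__ == "__main__":
    for m in asyncio.run(run_conversation()).messages:
        print(f"{m.sender}: {m.content}")
```

Because Bob replies to the last message in the session, his canned answer embeds Alice's text, which is the same hand-off the Maia test orchestrates with two `agent_responds` calls.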

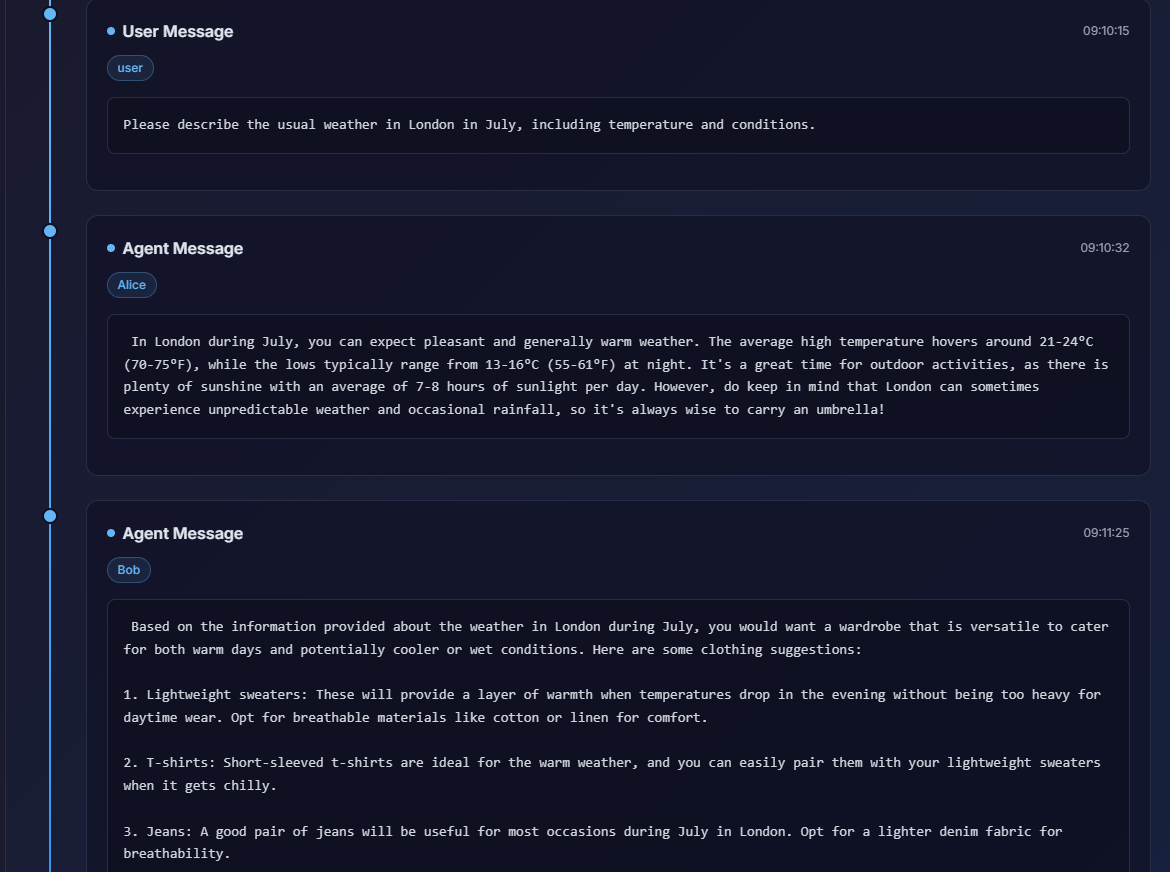

Visualizing

Note:

More info about visualization can be found here: Dashboard

After test execution, you can check all messages, durations, etc., in the dashboard. Here is the output of the above test:

Note:

And that's it! Your first test is working and checks the multi-agent AI system. More test examples can be found here: Basic Tests